The Imagination Gap

Using AI to See Through Someone Else’s Eyes: Validating Alt-Text with Image Generation

Alt-text is one of the simplest accessibility practices to describe, and one of the hardest to do well. It asks us to translate an image into language so that someone who cannot see the image can still understand it. But between what we intend and what someone else understands lies a space where the meaning can drift.

That space is what I call the Imagination Gap—the distance between the mental image a writer believes they are conveying and the one a reader constructs from the words alone.

AI image generation offers a novel way to make that gap visible.

By regenerating an image solely from the alt-text written to describe it, we can examine how much meaning is preserved, how much is lost, and where ambiguity lives. The goal is not to automate alt-text or replace human expertise; it is to reveal the limits of our language so we can write with greater clarity and intention.

What Alt-Text Actually Is

For readers outside the accessibility space: alt-text is a written description of an image that can be read aloud by assistive technologies such as screen readers. It ensures that people who cannot see the image still have access to the same information.

Good alt-text is not simply a list of objects; it conveys the essential meaning of the image.

But meaning is subjective. And when we describe an image, we are guided by our own assumptions, visual context, and background knowledge—none of which the reader necessarily shares.

The Imagination Gap

When we write alt-text, we picture the original image in our minds.

We already know what the notebook looks like, what the person in the photograph is doing, what the brand colors mean, what the expression on a face implies.

But the reader does not.

They receive only the words.

And words, even simple ones, are incomplete.

If I say “a red notebook,” what do you imagine?

- Bright red? Deep red? Brown-red?

- Hardcover? Softcover? Leather? Cloth?

- Large? Small? Tall? Wide?

- New? Worn? Handmade? Factory-made?

Every reader fills in the blanks differently.

This is the Imagination Gap—the invisible distance between the writer’s intention and the reader’s interpretation. It is especially critical in accessibility, where clarity is not a luxury but a necessity.

Why Human Validation Isn’t Enough

We often assume the solution is simple: ask blind users to validate alt-text.

But relying on that alone is unfair and unsustainable.

Blind reviewers are constantly asked to validate, test, and correct accessibility work, often unpaid and often at scale. And even when human review is in place, communication bias creeps in. A reviewer may imagine something different from what the author intended but describe it in a way that masks the misunderstanding. Or they may not know which visual details the author considered essential.

Human review is invaluable, but it is not exhaustive.

The author knows the image in their mind.

The reviewer knows the image in theirs.

Neither can see the gap between them.

AI image generation offers a third perspective—one that exposes ambiguity without taxing the community we are trying to support.

The Insight: AI as a Reverse Alt-Text Engine

An image generator can serve as a literal interpreter of your description.

If you give it only your alt-text, without the original image, and ask it to generate exactly what the text describes, the output reveals how your words function when stripped of context.

When I first attempted this, I had almost no experience with image generation tools. I didn’t even realize one was built into ChatGPT. I simply explained what I wanted to test, and the model suggested I prepend my prompt with the following instruction:

“Generate a literal, documentary-style image based strictly on this description. Do not add or infer artistic elements beyond what is explicitly stated.”

That phrasing forces the model to treat your text like documentation rather than inspiration.

It mirrors the purpose of alt-text: factual, unembellished communication.

With modern models, the exact wording matters less than the principle: ask the AI to interpret your alt-text literally.

This reveals where the Imagination Gap appears.
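As a rough illustration, the prepended instruction can be wrapped in a small helper so that every test uses the same wording. This is only a sketch: the names `LITERAL_INSTRUCTION` and `build_literal_prompt` are made up for this example, and the resulting string would still need to be pasted into, or sent to, whichever image generator you use.

```python
# Instruction text quoted from the article; the constant and function names
# are hypothetical and exist only for this sketch.
LITERAL_INSTRUCTION = (
    "Generate a literal, documentary-style image based strictly on this "
    "description. Do not add or infer artistic elements beyond what is "
    "explicitly stated."
)


def build_literal_prompt(alt_text: str) -> str:
    """Prepend the literal-interpretation instruction to the alt-text."""
    # Collapse stray whitespace so copy-paste line breaks do not leak in.
    cleaned = " ".join(alt_text.split())
    if not cleaned:
        raise ValueError("alt-text is empty; there is nothing to reconstruct")
    # Only the instruction and the description are sent: no filename, no caption.
    return f"{LITERAL_INSTRUCTION}\n\n{cleaned}"


prompt = build_literal_prompt(
    "A black fountain pen rests diagonally across a closed red leather "
    "notebook on a light wood surface."
)
print(prompt)
```

Keeping the instruction fixed means that any change in the generated image can be traced to a change in the alt-text rather than to a change in the framing.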

The Method

The workflow is simple:

- Write your alt-text normally. Describe the image as you intend it.

- Copy only the text. No filenames, captions, or visual hints.

- Paste it into the image generator with a literal-interpretation instruction.

- Compare the generated image to the original. Where does it match? Where does it drift? What details did you assume were obvious?

- Refine the alt-text. Add clarity where ambiguity appeared.

- Generate the second reconstruction. Confirm whether the meaning is now more faithfully conveyed.

This process takes minutes—and the insight is immediate.
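For anyone who wants to keep a record of each pass, the loop above could be sketched roughly as follows. Everything here is an assumption made for illustration: `Round`, `run_round`, and `needs_revision` are invented names, and `generate` stands in for whatever image tool you actually use (the stub below calls no real model). The comparison step stays manual; a person looks at both images and writes down the drift.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Round:
    """One pass through the loop: the text sent and what came back."""
    alt_text: str
    image: bytes
    drift_notes: List[str] = field(default_factory=list)


def run_round(alt_text: str, instruction: str,
              generate: Callable[[str], bytes]) -> Round:
    # Send only the instruction and the alt-text, nothing else.
    text = alt_text.strip()
    prompt = f"{instruction}\n\n{text}"
    return Round(alt_text=text, image=generate(prompt))


def needs_revision(round_: Round) -> bool:
    # Drift notes are written by a person comparing the two images by eye.
    return len(round_.drift_notes) > 0


def fake_generate(prompt: str) -> bytes:
    # Stand-in for a real image generator; returns placeholder bytes.
    return prompt.encode("utf-8")


first = run_round("A red notebook.", "Interpret literally.", fake_generate)
first.drift_notes.append("cover came out symmetrical")
print(needs_revision(first))  # True
```

Passing the generator in as a function keeps the sketch independent of any particular tool, so the same record-keeping works whether the image comes from a chat interface or a script.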

A Demonstration: Closing the Imagination Gap Through Iteration

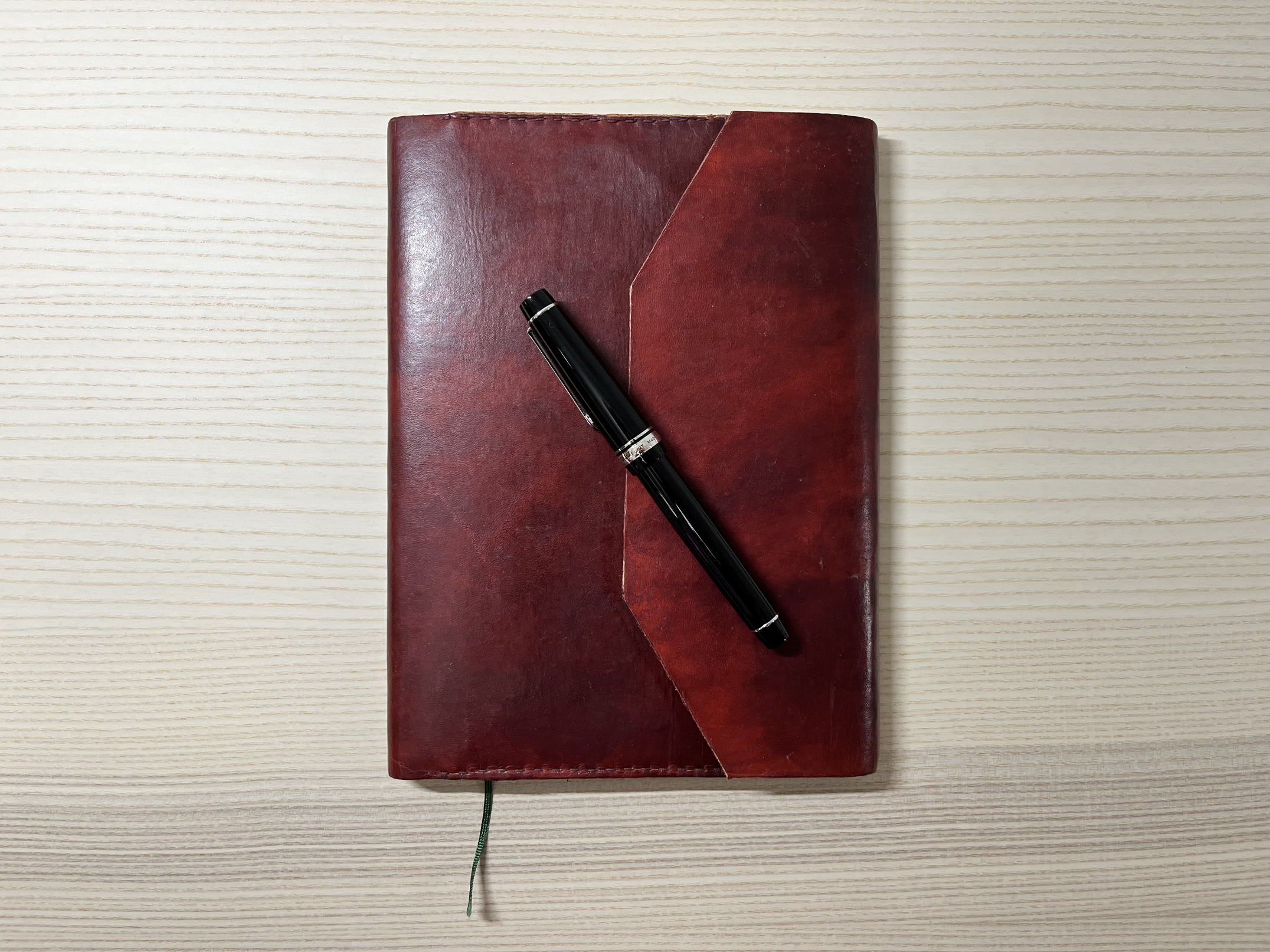

To illustrate how this works, I staged a simple photograph using objects from my own desk:

a handmade red leather notebook cover and a black fountain pen.

At first glance, it’s an uncomplicated scene.

But simplicity often hides ambiguity.

Original image:

“A handmade red leather notebook cover lies closed on a light wood surface. The cover is dyed in varied deep red and brown tones and features a distinctive asymmetrical front flap that folds over from the right side. A black fountain pen with silver accents rests diagonally across the notebook from upper left to lower right. The leather shows visible texture and natural tonal variation, with subtle highlights from the overhead lighting.”

This is the authoritative description used for comparison in the alt-text reconstruction examples below.

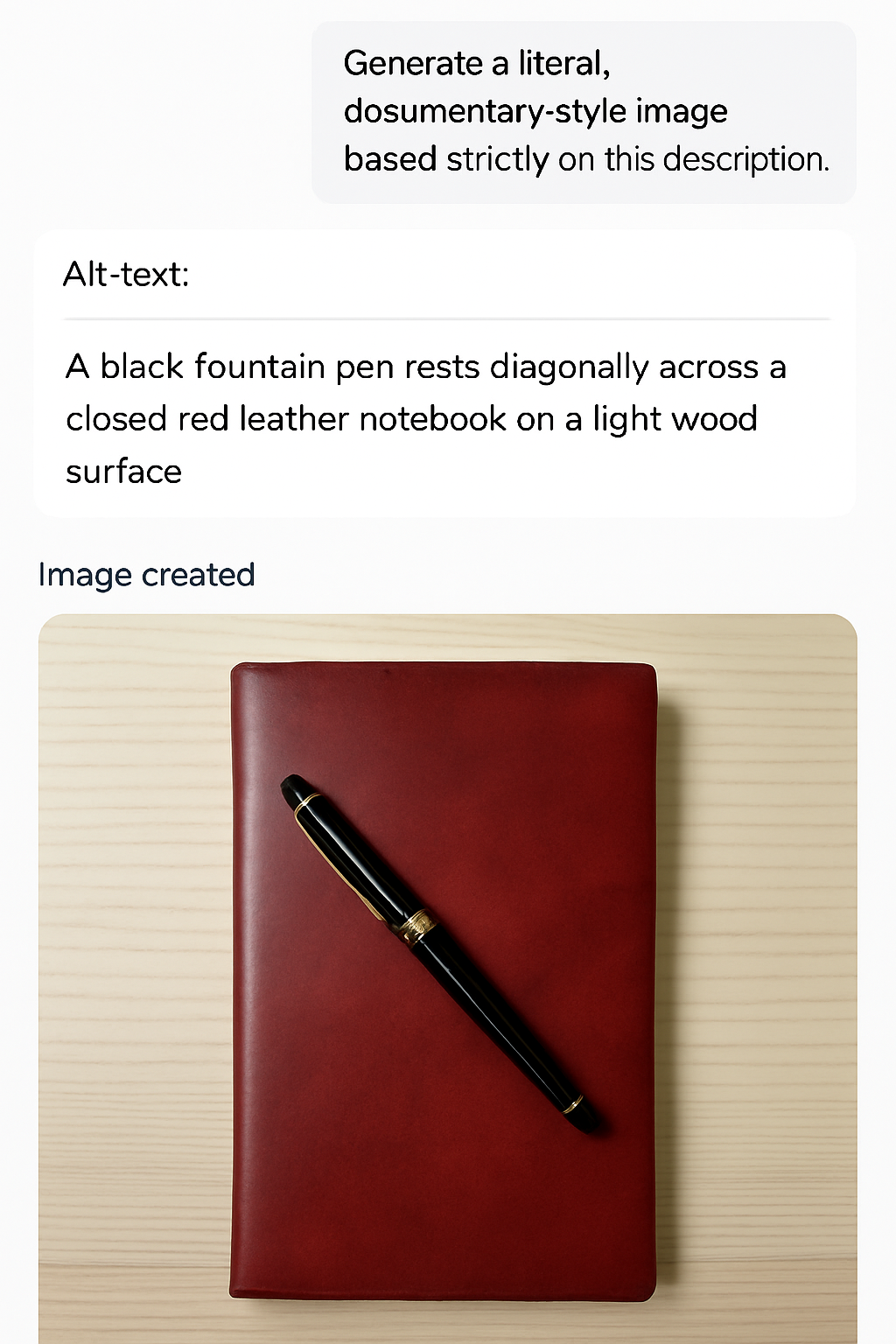

Version 1: A Reasonable First Attempt

Alt-text (Version 1):

“A black fountain pen rests diagonally across a closed red leather notebook on a light wood surface.”

This description is common: correct in a general sense, serviceable, and typical of what appears on product pages and blogs.

AI reconstruction (Version 1):

The result is surprisingly close—but not quite right.

The model interpreted the scene as:

- a smooth, symmetrical commercial notebook

- flat red leather with no tonal variation

- a background whose wood grain differs subtly

- proportions that don’t quite match

- a pen orientation that is approximate rather than specific

These are not mistakes—they are interpretations in the absence of explicit detail.

This is the Imagination Gap made visible.

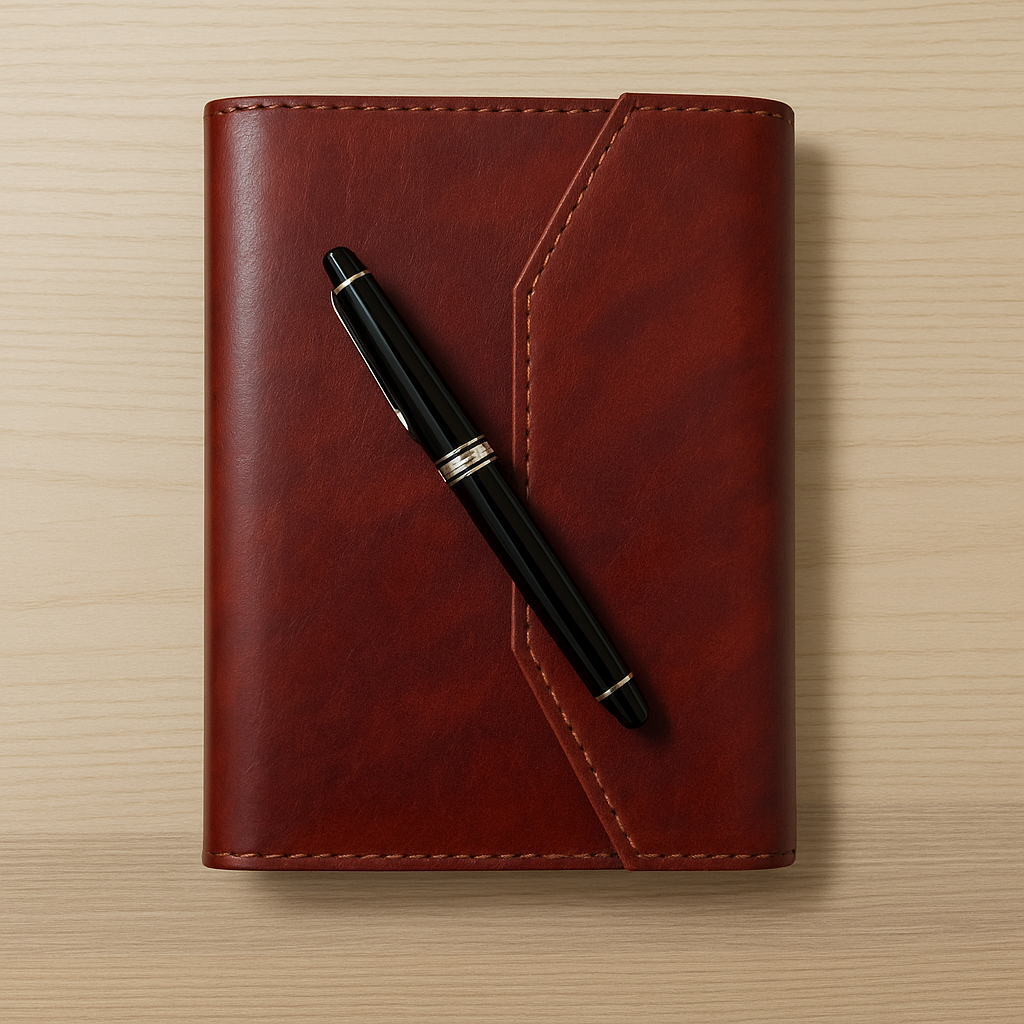

Version 2: Clarifying Intention

Using what the reconstruction revealed, I rewrote the alt-text more intentionally:

Alt-text (Version 2):

“A handmade red leather notebook cover with an asymmetrical front flap lies closed on a light wood table. The leather shows deep red and brown tones with a natural waxed sheen. A black fountain pen with silver accents rests diagonally across the center of the notebook.”

AI reconstruction (Version 2):

Immediately, we see:

- the asymmetrical flap appears

- the leather shows richer tonal variation

- the sheen is more accurate

- proportions are closer

- the pen is centered and clearly oriented

It is still not identical to the original—and it never should be.

Language cannot encode the exact experience of hand-dyed leather or the tactile nuance of craftsmanship.

But the distance between intention and interpretation has narrowed significantly.

That is the purpose of this exercise.
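To see at a glance which details the second version introduced, a rough word-level comparison of the two alt-texts can help. The sketch below is not part of the original workflow; the function names and the tiny stopword list are assumptions chosen for this example, and a plain word comparison says nothing about whether the added words are the right ones.

```python
import re

# Small, hand-picked stopword list; an assumption for this sketch only.
STOPWORDS = {"a", "an", "and", "of", "on", "the", "with"}

VERSION_1 = (
    "A black fountain pen rests diagonally across a closed red leather "
    "notebook on a light wood surface."
)
VERSION_2 = (
    "A handmade red leather notebook cover with an asymmetrical front flap "
    "lies closed on a light wood table. The leather shows deep red and brown "
    "tones with a natural waxed sheen. A black fountain pen with silver "
    "accents rests diagonally across the center of the notebook."
)


def content_words(text: str) -> set:
    """Lowercased words in the text, minus the stopwords above."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def added_terms(old: str, new: str) -> list:
    """Words that appear in the new text but not in the old one, sorted."""
    return sorted(content_words(new) - content_words(old))


for term in added_terms(VERSION_1, VERSION_2):
    print(term)
```

The list it prints includes words such as "handmade", "asymmetrical", and "sheen", which line up with the details the first reconstruction got wrong.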

Ethical Considerations

AI cannot replace blind users.

Human judgment is irreplaceable, especially when describing emotion, context, or cultural meaning.

AI also carries the biases and limitations of its training data. A divergence may reflect ambiguity in the text—or bias in the model.

This method should reduce, not increase, the burden on blind reviewers by helping writers resolve avoidable ambiguity before passing content along for human validation.

Transparency matters: this technique is a tool for clarity, not automation.

Alt-text must remain an act of human communication.

Why This Matters for Humane Systems Design

Accessibility is often framed as compliance.

But humane systems design asks a deeper question:

Does this system create harmony between intention and experience?

Alt-text sits at the intersection of language, perception, and meaning. When it fails, it reveals where a system assumes sight is universal. When it succeeds, it becomes an act of inclusion.

Using AI in this way transforms alt-text from an afterthought into an intentional moment of clarity. It externalizes the assumptions we make unconsciously, allowing us to see our own language with new eyes.

AI is not the author.

It is the mirror.

By reflecting what our words truly communicate, it helps us design with care, precision, and empathy.

It turns ambiguity into awareness.

It reveals the system behind the surface.

And sometimes, in a small way, it creates harmony.

Conclusion

Alt-text asks us to describe what we see so that someone else can understand it. But understanding is never guaranteed. Between intention and experience lies the Imagination Gap.

Using AI to reconstruct an image from alt-text doesn’t close that gap—it illuminates it. It shows us the interpretive space our words create. It reveals where meaning drifts. And it allows us to refine our language with greater intention.

This method is not about automation or efficiency.

It is about awareness.

By making ambiguity visible, we can write alt-text that is clearer, more honest, and more human. We can respect the cognitive labor of blind users. And we can build digital systems that better align intention with experience.

A small act of harmony in a system that touches millions every day.